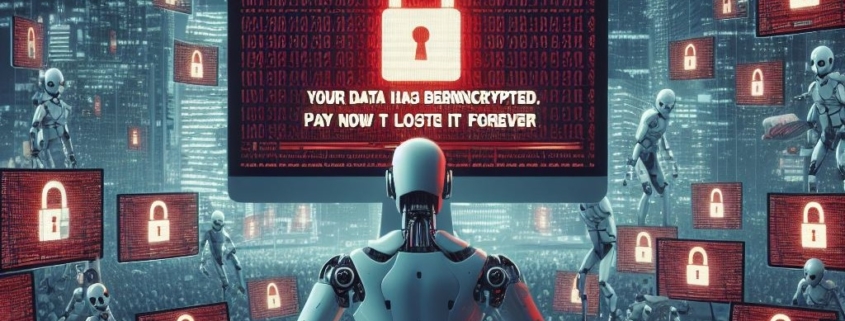

Artificial Intelligence Can Exacerbate Ransomware Attacks, Warns UK’s National Cyber Security Centre

The National Cyber Security Centre (NCSC) has released a report setting out its findings. According to the report, AI lowers the barrier to entry for novice hackers, making it easier for them to break into systems and carry out malicious activity while evading detection. With AI tools available around the clock, identifying and targeting victims also becomes far easier.

The NCSC expects the global ransomware threat to increase significantly over the next two years. Criminals have already developed criminal generative AI, commonly referred to as “GenAI,” and are set to offer it as a service to anyone who can afford it. Such a service would make it even easier for people with little technical skill to break into corporate systems.

Lindy Cameron, chief executive of the NCSC, urges companies to keep pace with modern cyber security tools. She emphasizes the importance of using AI productively to manage the risk from cyber threats.

Ransomware is the most frequent form of cybercrime, and for good reason: it offers substantial financial rewards and has a well-established business model. With AI now in the mix, ransomware attacks are not going away.

James Babbage, Director General for Threats at the National Crime Agency (NCA), backs the report’s findings. Criminals will continue to exploit AI for their own benefit, and businesses must step up their defenses to deal with it. AI increases the speed and capability of existing cyberattack schemes, and it offers an easy entry point for cyber criminals of all kinds, regardless of their expertise or experience. Babbage also notes that fraud and child sexual abuse are likely to be affected as the technology advances.

The British Government is strategically working on its cyber security plan. As of the latest reports, £2.6 billion ($3.3 billion) has been invested to…
