Ensemble averaging deep neural network for botnet detection in heterogeneous Internet of Things devices

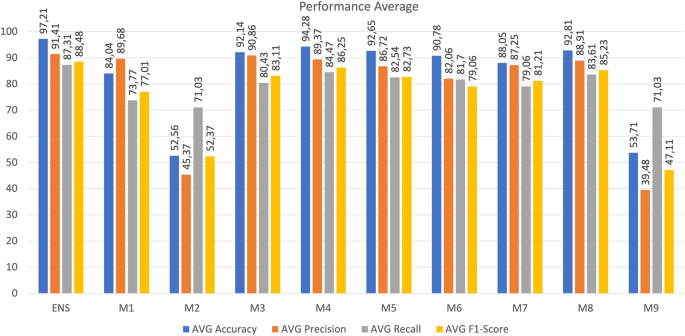

This section presents and discusses the results of the simulation modeling and benchmarking study. The findings are considered in terms of their impact on ensemble averaging for NIDS in heterogeneous IoT devices, and potential areas for future research are highlighted.

Experiment environment

The experiments ran on a server with a 2.3 GHz 16-core Intel(R) Xeon(R) E5-2650 v3 CPU and 128 GB of memory, running Ubuntu 22.04.2 LTS. The DNN experiments were implemented in Python 3.10.6, with Keras 2.12 as the machine learning library. Jupyter Notebook 6.5.3 was used to present the experiment and simulation results.

Preliminary analysis

This section explains the results of Scenario 1 and Scenario 2. Scenario 1 assessed the performance of individual DNN models, each built from device-specific traffic, in detecting botnet attacks within the traffic of its own device.

The results of Scenario 1 are presented in Table 7. The DNN model of each device performed robustly on the traffic generated by that device. Accuracy for each device reached 100%, indicating that both botnet traffic (true positives) and normal traffic (true negatives) were identified correctly. Precision and recall both exceeded 99%, meaning the models rarely misclassified normal traffic as an attack and rarely missed an actual attack. The models also achieved a high F1-score, which reflects a balance between precision and recall. Training and prediction times depended on dataset volume, with larger datasets leading to longer training and prediction durations. The model size, by contrast, remained at around 70 KB for every DNN model and was unaffected by variations in training data volume.
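For reference, the four metrics discussed above follow from the confusion-matrix counts of a binary classifier (botnet vs. normal traffic). The sketch below is illustrative only: the function name `binary_metrics` and the example counts are hypothetical and are not taken from Table 7 or from the authors' code. It also shows how an accuracy slightly below 1.0 can appear as 100% once rounded to a whole percentage.

```python
def binary_metrics(tp, fp, tn, fn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts.

    tp: attacks flagged as attacks      fp: normal traffic flagged as attacks
    tn: normal traffic left unflagged   fn: attacks that were missed
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts for a single device (not the paper's data).
example = binary_metrics(tp=9990, fp=5, tn=9995, fn=10)
for name, value in example.items():
    # Rounding to a whole percentage hides small residual error rates.
    print(f"{name}: {value:.5f} ({value:.0%})")
```

With these made-up counts every metric lies above 99.9% and rounds to 100%, so rounded values that all read close to 100% are compatible with a small number of residual misclassifications.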