The Impacts of AI on Cyber Security Landscape

AI’s newfound accessibility will cause a surge in prompt hacking attempts and private GPT models used for nefarious purposes, a new report revealed.

Experts at the cyber security company Radware forecast the impact that AI will have on the threat landscape in the 2024 Global Threat Analysis Report. It predicted that the number of zero-day exploits and deepfake scams will increase as malicious actors become more proficient with large language models and generative adversarial networks.

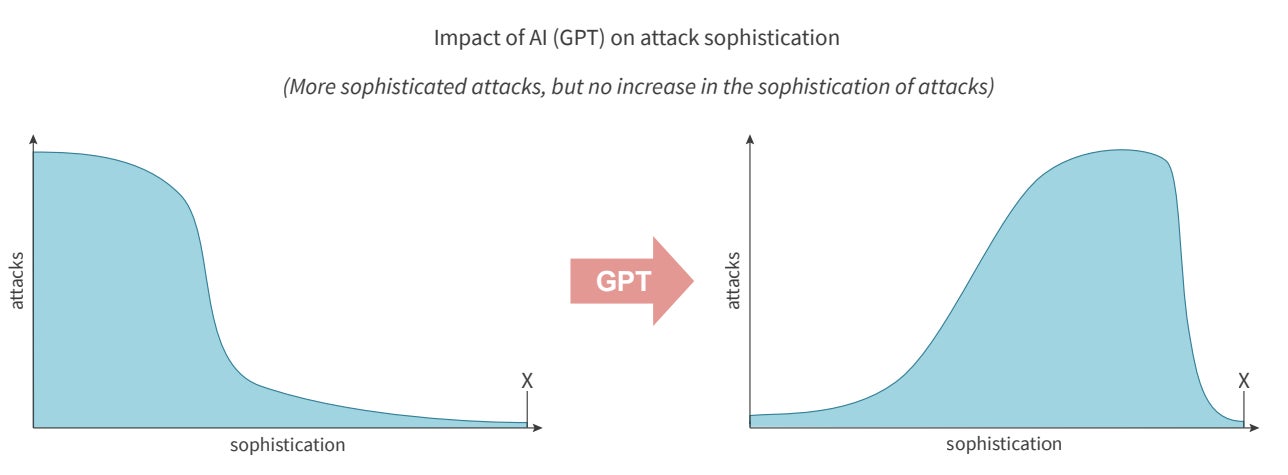

Pascal Geenens, Radware’s director of threat intelligence and the report’s editor, told TechRepublic in an email, “The most severe impact of AI on the threat landscape will be the significant increase in sophisticated threats. AI will not be behind the most sophisticated attack this year, but it will drive up the number of sophisticated threats (Figure A).

“In one axis, we have inexperienced threat actors who now have access to generative AI to not only create new and improve existing attack tools, but also generate payloads based on vulnerability descriptions. On the other axis, we have more sophisticated attackers who can automate and integrate multimodal models into a fully automated attack service and either leverage it themselves or sell it as malware and hacking-as-a-service in underground marketplaces.”

Emergence of prompt hacking

The Radware analysts highlighted “prompt hacking” as an emerging cyberthreat, thanks to the accessibility of AI tools. This is where prompts are inputted into an AI model that force it to perform tasks it was not intended to do and can be exploited by “both well-intentioned users and malicious actors.” Prompt hacking includes both “prompt injections,” where malicious instructions are disguised as benevolent inputs, and “jailbreaking,” where the LLM is instructed to ignore its safeguards.

Prompt injections are listed as the number one security vulnerability on the OWASP Top 10 for LLM Applications. Famous examples of prompt hacks include the “Do Anything Now” or “DAN” jailbreak for ChatGPT that allowed users to bypass its restrictions, and when a…